Our Approach

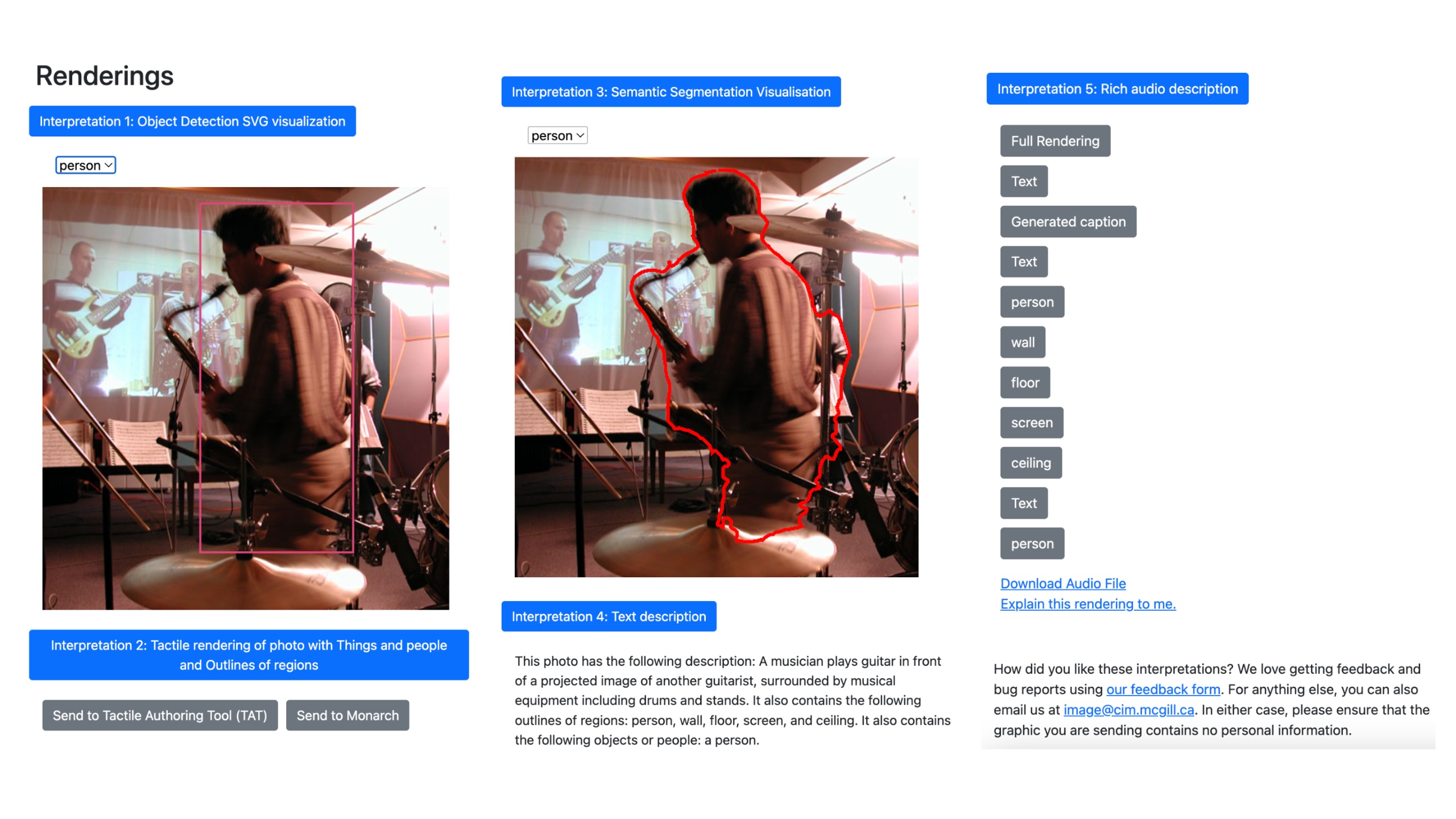

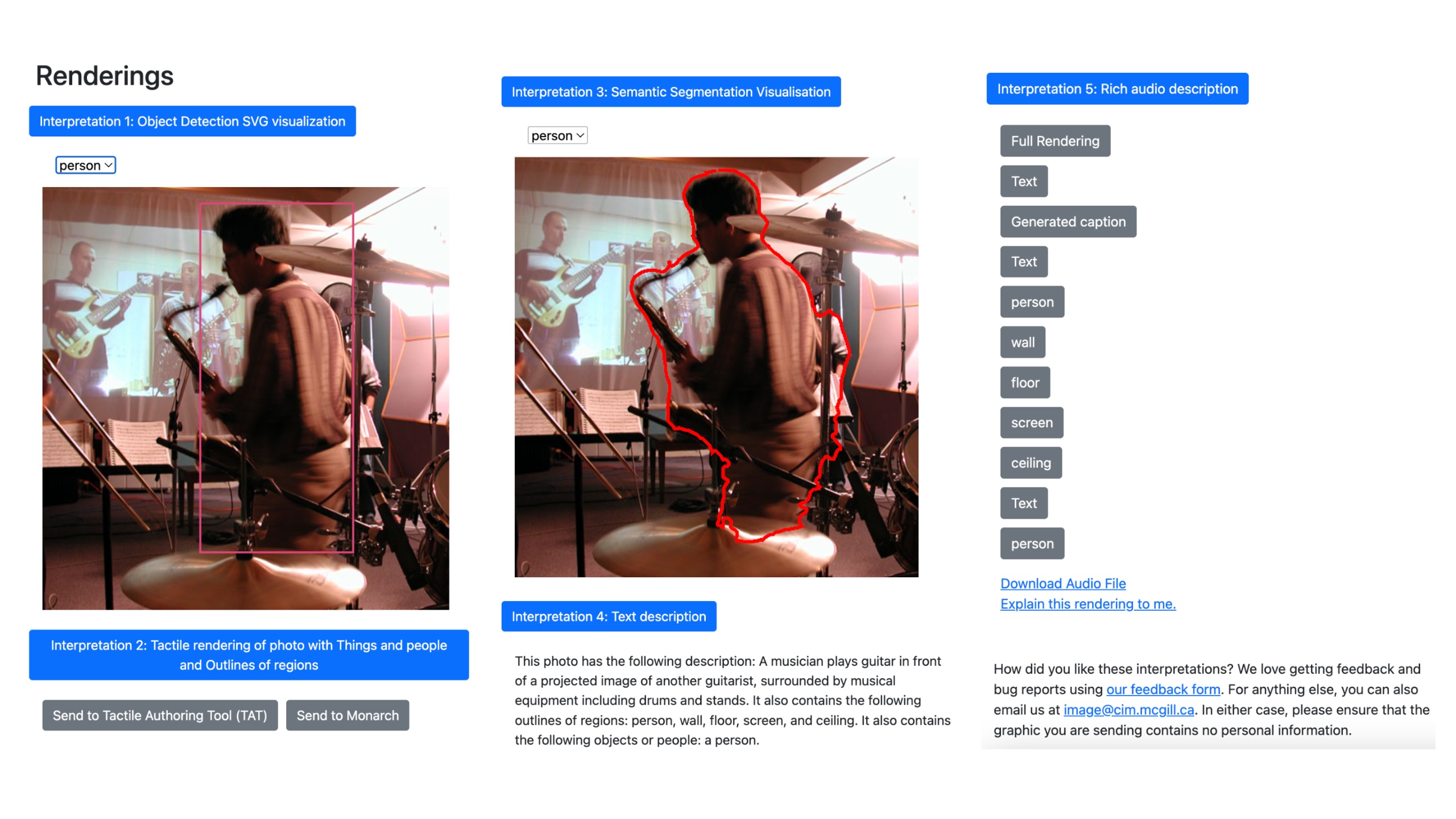

If you are blind or low vision, have you ever wanted to hear a photograph or pie chart, and not just a description of what is in it? The IMAGE project (Internet Multimodal Access to Graphical Exploration) adds controls to graphics in your browser so that you can activate IMAGE on photographs and on some charts and maps, on any webpage you visit. You then receive experiences that combine spoken words with other sounds positioned around your head, conveying details such as where things are, how large they are, or other information depending on the graphic. We are also working on touch experiences using the Humanware Monarch braille pin array tablet, but those IMAGE experiences have not yet been released.

To try IMAGE right away, jump to the tutorial, or install the browser extension from the IMAGE Project page on the Chrome Web Store.

We know that our small team will never be able to create experiences for every kind of graphic, so we have built IMAGE to be modular (a set of Docker microservices) and fully extensible. You can create your own microservices, building on the ones that already exist, to focus on just the content and experiences that matter most to you. You can then run your own IMAGE server to handle client requests, and use it by entering your server's URL in the IMAGE browser extension options. IMAGE is an open source project, with GitHub repositories for the server components and for our Chrome browser extension.
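To make the idea of "building on the microservices already created" more concrete, here is a minimal Python sketch of the general pattern, under stated assumptions. It assumes a pipeline in which some services (called "preprocessors" here) extract data about a graphic, and other services (called "handlers" here) turn that pooled data into renderings for the user. Every name in it (`object_detector`, `spoken_summary`, `object_count`, `orchestrate`, and the request and rendering fields) is hypothetical, and plain function calls stand in for the HTTP calls that would pass between separate Docker containers. It is not the IMAGE server's actual API; consult the IMAGE server wiki for the real interfaces.

```python
from typing import Callable

# Hypothetical types: a request is a plain dict describing one graphic,
# and each stage is a function standing in for a separate microservice.
Request = dict
Preprocessor = Callable[[Request], dict]
Handler = Callable[[Request, dict], list]


def object_detector(request: Request) -> dict:
    """Hypothetical preprocessor: pretend to detect objects in a photo."""
    # A real service would analyze request["graphic"]; fixed, made-up
    # detections keep this sketch self-contained. x is 0.0 (left) to 1.0 (right).
    return {"objects": [{"label": "dog", "x": 0.2}, {"label": "ball", "x": 0.8}]}


def spoken_summary(request: Request, data: dict) -> list:
    """Hypothetical handler: describe detected objects from left to right."""
    objects = data.get("object_detector", {}).get("objects", [])
    if not objects:
        return []
    parts = []
    for obj in sorted(objects, key=lambda o: o["x"]):
        side = "left" if obj["x"] < 0.5 else "right"
        parts.append(f"{obj['label']} on the {side}")
    return [{"type": "text", "text": ", ".join(parts)}]


def object_count(request: Request, data: dict) -> list:
    """A new, hypothetical handler that reuses the existing detector's output."""
    objects = data.get("object_detector", {}).get("objects", [])
    return [{"type": "text", "text": f"{len(objects)} objects detected"}]


def orchestrate(request: Request,
                preprocessors: dict[str, Preprocessor],
                handlers: list[Handler]) -> dict:
    """Run every preprocessor, pool their outputs by name, then collect
    the renderings produced by every handler."""
    data = {name: service(request) for name, service in preprocessors.items()}
    renderings = []
    for handler in handlers:
        renderings.extend(handler(request, data))
    return {"renderings": renderings}


if __name__ == "__main__":
    result = orchestrate(
        {"graphic": "<image data>"},
        {"object_detector": object_detector},
        [spoken_summary, object_count],
    )
    for rendering in result["renderings"]:
        print(rendering["text"])
```

In this sketch, adding `object_count` required no change to `object_detector` or `orchestrate`: a new service only needs to read the pooled data it cares about. That separation is what lets each contributor focus on a single kind of content or experience.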

For detailed information on the IMAGE architecture, start with our paper "IMAGE: An Open-Source, Extensible Framework for Deploying Accessible Audio and Haptic Renderings of Web Graphics" for an overview of the system as a whole, then consult the wiki of the IMAGE server repository for more specific implementation details. We welcome pull requests. Please see our Contributor License Agreement.

If you are thinking about using IMAGE as a foundation for your project, we would love to hear from you. We would be delighted to see IMAGE become a playground for researchers, developers, designers, and others to create and deploy new ways of making web graphics more accessible.